Feature switching for release testing.

When your cloud deployments do not all have the same features enabled, running the same test calls will generate different responses. How can you ensure that the tests for each individual release verify the expected functionality for that deployment?

The problem

It is not uncommon to deploy code to production servers while keeping it hidden behind a feature switch. When the moment comes to go live, the configuration is changed to enable the feature. If it is just new functionality, you might use a test filter on releases to run only the tests for the currently enabled features.

But what if you have multiple deployments for different customers, each customised with the features that they have chosen to activate? How do you go about ensuring that each deployment functions as it should?

Option 1: Run only the tests for the enabled features

Give each test a category based upon the feature it tests. In the release definition, choose to run only the tests for the selected features.
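A minimal sketch of the release-side filtering, assuming each test is tagged with NUnit's `[Category("FeatureName")]` attribute and the release runs `dotnet test --filter`, where the NUnit test adapter accepts `TestCategory` in filter expressions. The helper and feature names are hypothetical, and it is written as a small top-level C# program so it can run on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Option 1 sketch: build a `dotnet test --filter` expression that selects only the
// tests whose [Category] matches an enabled feature.
// BuildEnabledFeatureFilter is a hypothetical helper, not part of NUnit or VSTest.
static string BuildEnabledFeatureFilter(IEnumerable<string> enabledFeatures)
{
    List<string> clauses = enabledFeatures
        .Select(feature => feature.Trim())
        .Where(feature => feature.Length > 0)
        .Select(feature => $"TestCategory={feature}")
        .ToList();

    // Refuse to build an empty filter so that a missing feature list is noticed.
    if (clauses.Count == 0)
    {
        throw new InvalidOperationException("No enabled features were supplied.");
    }

    // "|" means OR in a VSTest filter expression.
    return string.Join("|", clauses);
}

string filter = BuildEnabledFeatureFilter(new[] { "CoreFeature", "EnterpriseFeature1" });
Console.WriteLine(filter); // TestCategory=CoreFeature|TestCategory=EnterpriseFeature1
```

Nothing in this filter can fail because a feature is unexpectedly switched on, which is the gap in this option.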

Advantages:

Simplicity. No need to do anything new.

Positively checks that enabled features are working.

Disadvantages:

Does not validate that features are disabled as expected: inadvertently enabling an additional feature would not fail any tests or block the release.

Putting it bluntly, you are not checking that your expensive new feature is only switched on for the customers paying for it.

Option 2: Run different tests depending on whether the feature is enabled

Now we need two test categories per feature (one for each toggle state) and two sets of tests.
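A sketch of how the release filter might look for this option, assuming tests are tagged with NUnit `[Category]` attributes, run through `dotnet test --filter` (whose NUnit adapter accepts `TestCategory`), and follow a hypothetical `<Feature>_Enabled` / `<Feature>_Disabled` naming convention. The helper is illustrative, written as a small top-level C# program.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Option 2 sketch: each feature has two categories, "<Feature>_Enabled" and
// "<Feature>_Disabled" (a hypothetical naming convention). For every known feature,
// select the category that matches this deployment's toggle state.
static string BuildToggleStateFilter(IEnumerable<string> allFeatures, ISet<string> enabledFeatures)
{
    IEnumerable<string> clauses = allFeatures.Select(feature =>
    {
        string state = enabledFeatures.Contains(feature) ? "Enabled" : "Disabled";
        return $"TestCategory={feature}_{state}";
    });

    // "|" means OR in a VSTest filter expression.
    return string.Join("|", clauses);
}

var enabled = new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "EnterpriseFeature1" };
string filter = BuildToggleStateFilter(new[] { "EnterpriseFeature1", "ReportingFeature" }, enabled);
Console.WriteLine(filter);
// TestCategory=EnterpriseFeature1_Enabled|TestCategory=ReportingFeature_Disabled
```

Every known feature contributes a clause whether or not it is enabled. That is what lets the "disabled" tests run, and also why each new feature adds two more categories to manage.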

Advantages:

Relatively simple to filter the required set of tests for each deployment.

Validates that disabled features are not present.

Disadvantages:

Requires writing (at least) two sets of tests for each feature. This will probably involve multiple copies of the same test setup with different asserts.

The sheer number of new categories will quickly grow to confusing levels.

Option 3: Add a feature toggle to the tests and use it to test that every environment is behaving as expected.

This time we are not filtering out any tests; we run them all on every environment. A configuration setting, e.g. from a .runsettings file, passes in the state of each feature toggle, and each test makes the assertions relevant to that toggle's state.

Each test follows the same setup (the Arrange and Act steps) but then provides alternative asserts for the different states of the toggle.
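Stripped of the test framework, the core of this option is choosing the expectation from the toggle state after a shared Arrange and Act. The helper below is a hypothetical, framework-free sketch written as a small top-level C# program; the status codes are placeholder values, not a claim about how any particular API reports a disabled feature.

```csharp
using System;
using System.Collections.Generic;

// Option 3 sketch: Arrange and Act are shared; only the expected result depends on
// the toggle. AssertMatchesToggle is a hypothetical, framework-free stand-in for the
// if/else assertions you would write with NUnit or FluentAssertions.
static void AssertMatchesToggle<T>(bool featureEnabled, T actual, T expectedWhenEnabled, T expectedWhenDisabled)
{
    T expected = featureEnabled ? expectedWhenEnabled : expectedWhenDisabled;

    if (!EqualityComparer<T>.Default.Equals(actual, expected))
    {
        string state = featureEnabled ? "enabled" : "disabled";
        throw new Exception($"Feature is {state}: expected '{expected}' but got '{actual}'.");
    }
}

// Placeholder values: on a deployment where the feature is off, a 404 passes...
AssertMatchesToggle(featureEnabled: false, actual: 404, expectedWhenEnabled: 200, expectedWhenDisabled: 404);

// ...but the same response fails where the feature should be on.
try
{
    AssertMatchesToggle(featureEnabled: true, actual: 404, expectedWhenEnabled: 200, expectedWhenDisabled: 404);
}
catch (Exception ex)
{
    Console.WriteLine(ex.Message); // Feature is enabled: expected '200' but got '404'.
}
```

Because the same test runs everywhere, a deployment with a feature switched on by mistake fails just as surely as one with a feature missing.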

Advantages:

Every test is run on every release

Validates that each feature is present or absent as expected

Disadvantages:

Requires writing alternative assertions for the same tests

Tests are more complex and harder to understand.

Of course, it might not be a two-way toggle.

Following on from my last post about testing cultures, the setting could instead be the list of supported languages, or the default culture.
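When the setting is a list rather than an on/off switch, the equivalent check is an exact set comparison: a language that is missing and a language that should not be there are both failures. This sketch assumes a comma-separated setting and a list of languages reported by the deployment; `FindLanguageMismatches` and the culture names are illustrative, and it is written as a small top-level C# program.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sketch for a non-binary setting: the expected value is a list (here, languages).
// Missing AND unexpected entries are both reported, the list equivalent of checking
// that a feature is present where enabled and absent where disabled.
// The comma-separated format and FindLanguageMismatches are hypothetical.
static List<string> FindLanguageMismatches(string expectedSetting, IEnumerable<string> actualLanguages)
{
    StringComparer comparer = StringComparer.OrdinalIgnoreCase;

    List<string> expected = expectedSetting
        .Split(',', StringSplitOptions.RemoveEmptyEntries)
        .Select(language => language.Trim())
        .Where(language => language.Length > 0)
        .ToList();
    List<string> actual = actualLanguages.ToList();

    var problems = new List<string>();
    problems.AddRange(expected.Except(actual, comparer).Select(language => $"missing: {language}"));
    problems.AddRange(actual.Except(expected, comparer).Select(language => $"unexpected: {language}"));
    return problems;
}

List<string> result = FindLanguageMismatches("en-GB, fr-FR", new[] { "en-GB", "de-DE" });
Console.WriteLine(string.Join("; ", result)); // missing: fr-FR; unexpected: de-DE
```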

Implementation notes.

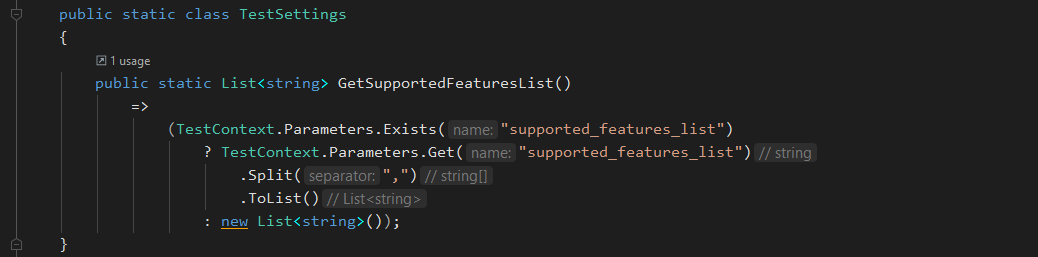

I have chosen to follow the third approach here, setting up my NUnit TestSettings class to provide a list of supported features for each environment.

The test setting should be provided as a comma-separated list of feature names.

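```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using NUnit.Framework;

namespace AlexanderOnTest.CultureTestSupport
{
    public static class TestSettings
    {
        // Reads the "supported_features_list" test run parameter (e.g. from a .runsettings file)
        // and returns the feature names it contains, or an empty list if it is not set.
        public static List<string> GetSupportedFeaturesList() =>
            TestContext.Parameters.Exists("supported_features_list")
                ? TestContext.Parameters.Get("supported_features_list")
                    .Split(',', StringSplitOptions.RemoveEmptyEntries)
                    .Select(feature => feature.Trim()) // accept "A, B" as well as "A,B"
                    .Where(feature => feature.Length > 0)
                    .ToList()
                : new List<string>();
    }
}
```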

I also do the same to provide a list of supported languages.

Then, within my tests, I use something like this:

```csharp
using System.Collections.Generic;
using System.Net;
using FluentAssertions;
using FluentAssertions.Execution;
using NUnit.Framework;

namespace AlexanderOnTest.CultureTestSupport
{
    public class FeatureBasedTests
    {
        private readonly List<string> supportedFeatures = TestSettings.GetSupportedFeaturesList();

        private bool IsEnterpriseFeatureEnabled => supportedFeatures.Contains("EnterpriseFeature1");

        [Test]
        public void EnterpriseFeature1IsEnabledIfRequired()
        {
            // Arrange
            ApiResponse expectedEnabledResponse = new ApiResponse
            {
                // Set up expected response when enabled
            };

            ApiResponse expectedDisabledResponse = new ApiResponse
            {
                // Set up expected response when disabled
            };

            // Act
            // MakeApiCall is a hypothetical placeholder: make your API call here.
            (HttpStatusCode status, ApiResponse actualResponse, ApiErrorResponse errorResponse) = MakeApiCall();
            TestContext.WriteLine($"Response: {actualResponse}");

            // Assert: the same Arrange/Act runs everywhere; only the expectation depends on the toggle.
            using (new AssertionScope())
            {
                status.Should().Be(HttpStatusCode.OK);

                // BeEquivalentTo compares the responses member by member rather than by reference.
                if (IsEnterpriseFeatureEnabled)
                {
                    actualResponse.Should().BeEquivalentTo(expectedEnabledResponse);
                }
                else
                {
                    actualResponse.Should().BeEquivalentTo(expectedDisabledResponse);
                }
            }
        }
    }
}
```

Final thoughts

- I recommend being as fine-grained as possible in the feature list. You never know when that ‘Enterprise’ feature will be unlocked for the ‘SME’ version.

- Resist the DevOps team or senior devs if they suggest configuring the tests automatically from the application's feature list. These are still black-box tests, checking that things are working AS EXPECTED, not AS CONFIGURED.

Did I miss anything or make a mistake?

Do you have a better plan? Please let me know in the comments below or on Twitter.

Writing code that works for this is a lot easier than working out what configurability is required.

Progress made:

- Defined a process to tailor testing to the set of features enabled on any particular deployment

Lessons learnt:

- Testing for different functionality on different servers is a challenging and complex issue

- Even with a plan, getting it right is difficult, especially when trying to anticipate all the combinations that might arise

- Providing a way to configure the tests in the release without a code check-in (e.g. a test setting) can save a lot of trouble

A reminder:

If you want to ask me a question, Twitter is undoubtedly the fastest place to get a response: My Username is @AlexanderOnTest so I am easy to find. My DMs are always open for questions, and I publicise my new blog posts there too.